The best user experience insights (and also the most exciting ones) are discovered by following a trail of clues.

Murder mysteries are generally not solved the instant the detective arrives at the crime scene. The case is not closed using only eyewitness interviews or solely through forensic evidence. At the crime scene, we can confirm one fact: someone died. We may even be able to deduce how. But it likely will not be obvious why. Or how long ago. Or by whose hand.

The forensics team looks at the evidence (behavioral data) to elicit facts. They share their findings with the detective, who formulates ideas and hypotheses. The detective canvasses the area, talking to people (attitudinal data). The cycle between analyzing evidence and gathering statements may or may not repeat depending on the circumstances, but in a good murder mystery, there are twists and turns that force the detective to re-evaluate the facts over and over again.

Here’s an example of a recent UX research experience that followed this pattern. Our client wanted to evaluate and, if necessary, improve their site search experience.

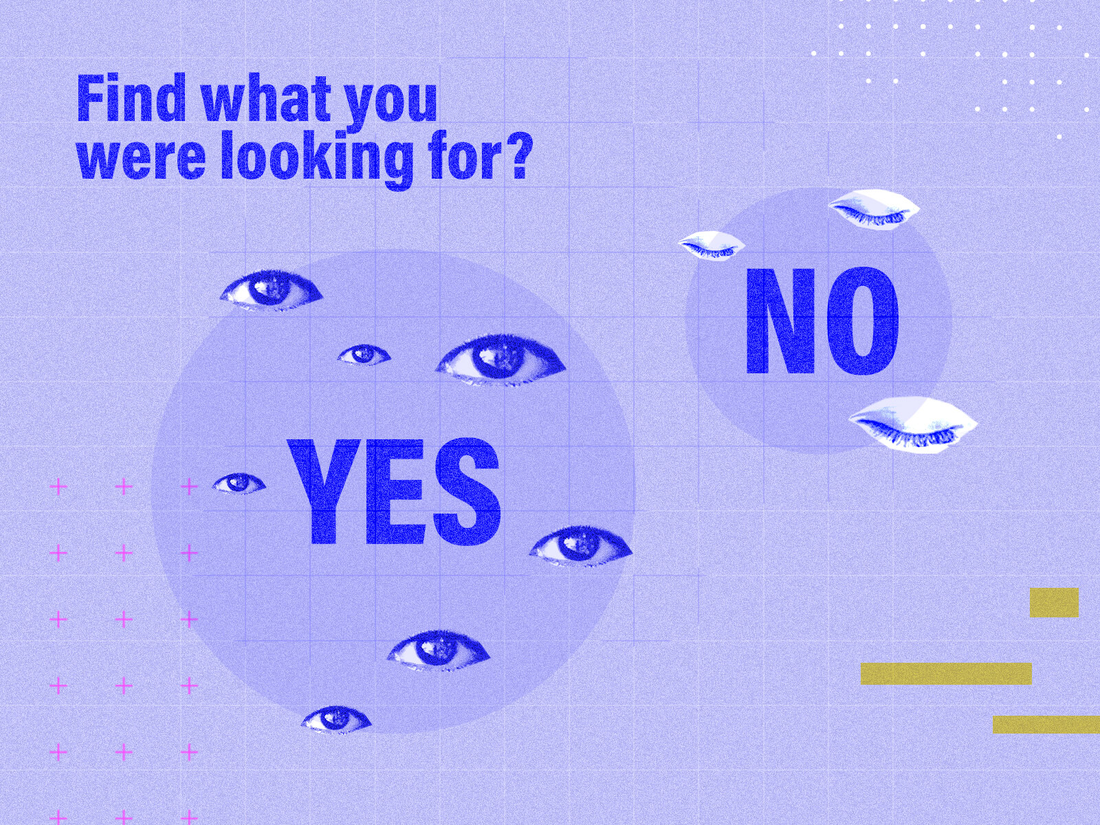

We decided to ask the users of site search how they felt search was performing. So, we launched a VOC (voice of the consumer) micro-survey on the site search results page asking:

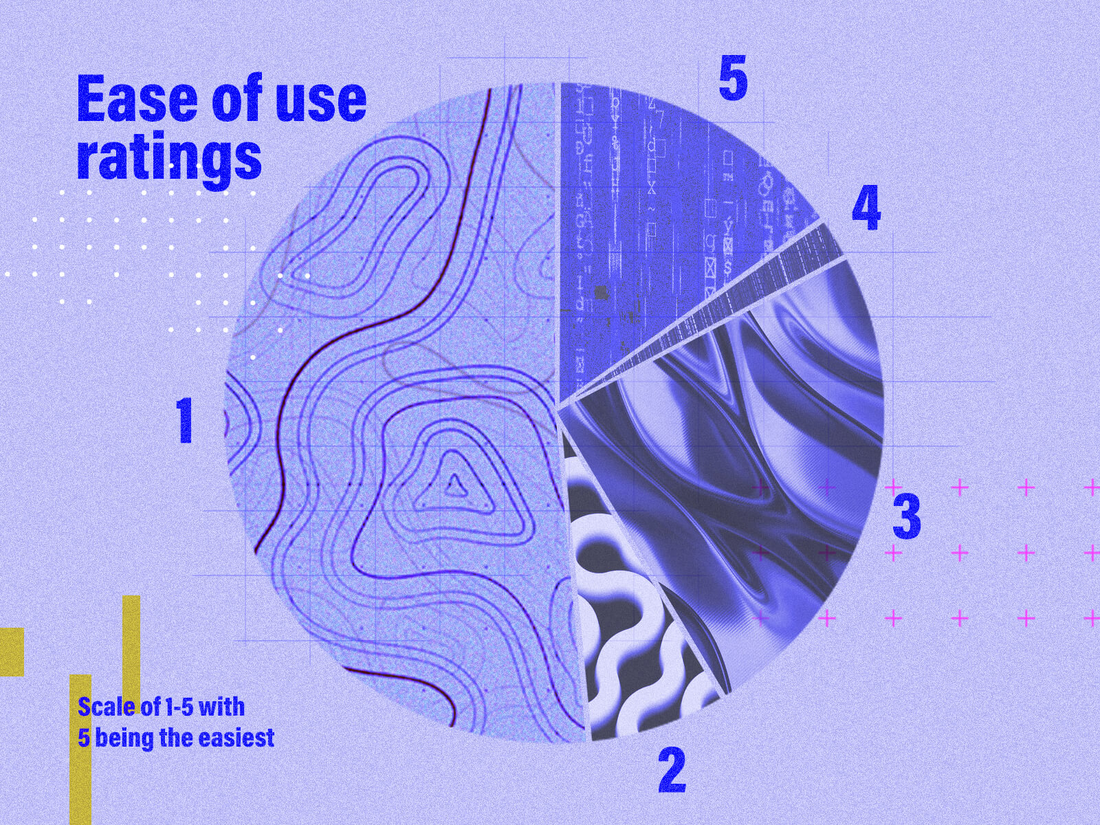

Scale of 1-5 with 5 being the easiest: 1-(51%), 2-(7%), 3-(24%), 4-(2%), 5-(15%)

Even without a huge number of responses, we were able to extract some themes. According to users, search was performing poorly.

Conclusion: Real users validated that something is going wrong with the site search experience and that it requires further investigation.
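To make "performing poorly" concrete, here is a minimal sketch that summarizes the rating percentages reported above. The variable names are our own, and the figures sum to 99 rather than 100 because the published percentages are rounded, so the mean is normalized by that total.

```python
# Share of responses per rating (percent), taken from the survey results above.
ratings = {1: 51, 2: 7, 3: 24, 4: 2, 5: 15}

# The published figures are rounded, so they sum to 99 rather than 100.
total = sum(ratings.values())

# Respondents who chose the two lowest ratings (1 or 2).
low = ratings[1] + ratings[2]
# Respondents who chose the two highest ratings (4 or 5).
high = ratings[4] + ratings[5]

# Weighted mean rating, normalized by the rounded total.
mean = sum(score * pct for score, pct in ratings.items()) / total

print(f"rated 1-2: {low}%  rated 4-5: {high}%  mean: {mean:.2f}")
```

More than half of respondents picked the lowest two ratings, which is the signal that justified digging further.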

We also added an open-ended, optional question to the end of the survey: How can we make this page a better experience for you?

We looked at these responses and categorized them into topics. Turns out, three search terms were mentioned with some level of frequency. Our first clue!

Conclusion: There is a pattern (specific search terms) around where people are encountering friction.
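The categorization itself was done by reading the responses, but a rough first pass can be automated. The sketch below counts how many open-ended responses mention each candidate term. The function name, the sample responses, and the term "claims" are hypothetical and made up for illustration; only "OTC" comes from this project.

```python
import re
from collections import Counter


def count_term_mentions(responses, terms):
    """Count how many responses mention each term (case-insensitive, whole word)."""
    counts = Counter()
    for text in responses:
        for term in terms:
            pattern = rf"\b{re.escape(term)}\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                counts[term] += 1
    return counts


# Made-up responses for illustration only; this is not the client's data.
sample_responses = [
    "I searched for OTC and got nothing useful",
    "Could not find my otc benefits",
    "Results for claims were confusing",
]

mentions = count_term_mentions(sample_responses, ["OTC", "claims"])
print(mentions.most_common())
```

A count like this only surfaces candidates. Someone still has to read the responses to understand what people were actually trying to find.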

In other words, can we extrapolate these experiences to a significant number of users and thus consider it a priority to fix?

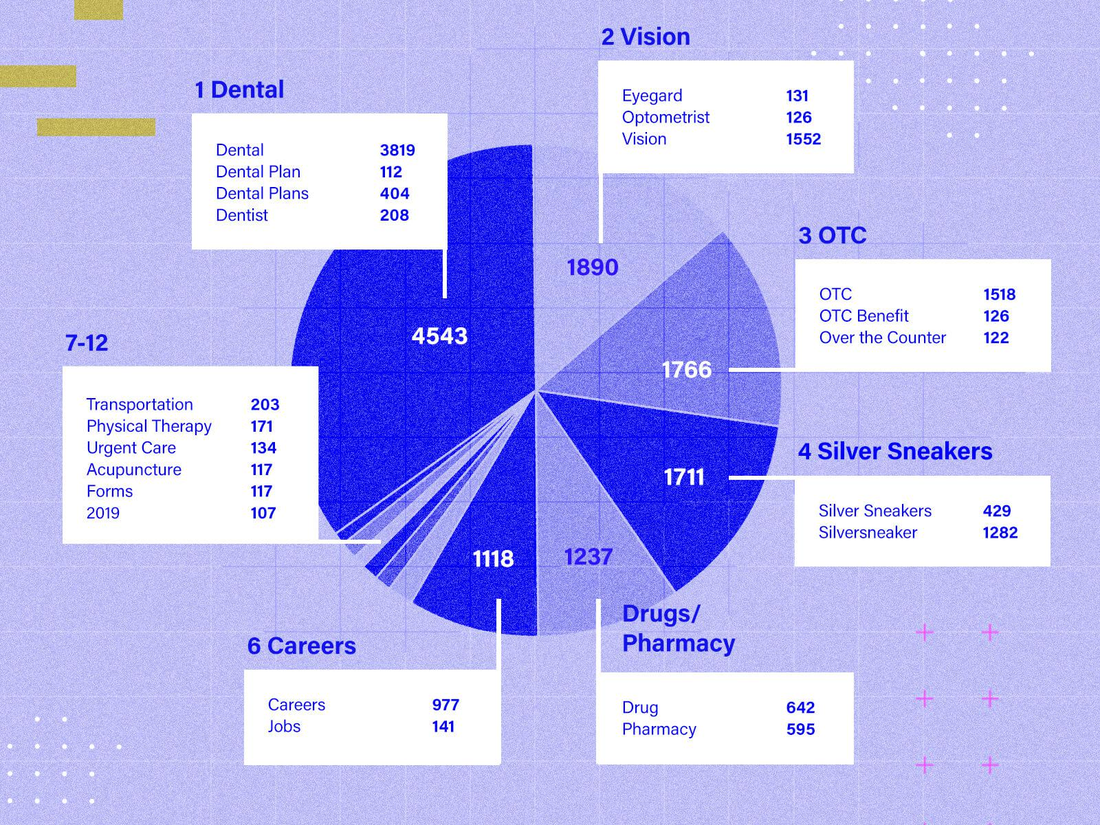

We narrowed our focus to the most frequently reported search term by dissatisfied users: “OTC”.

Next, we went to Google Analytics to see which search terms people were entering most often. And guess what? Our search term was the second most searched! Furthermore, the search tool did not display this term as a suggested search term, as it did with the #1 and #3 most searched terms. And as we looked at the variants across all three of the top terms, OTC still ranked high.

This also validated its priority in our minds. If people weren’t getting served this search term as a suggestion, then they were coming to the site with the need for that information.

Conclusion: There is enough data to validate that a significant number of people are searching for the same term or one of its variants and thus are likely having the same problem.
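One way to check how a term ranks once its variants are counted together is to roll the variants up before sorting. This is a sketch under stated assumptions: the rows stand in for an exported list of (search term, number of searches), the counts and the placeholder terms are invented, and treating "over the counter" as a variant of "OTC" is our assumption rather than a finding from the analytics data.

```python
from collections import defaultdict


def rank_terms(rows, variants):
    """Sum searches per canonical term, then sort from most to least searched."""
    totals = defaultdict(int)
    for term, searches in rows:
        key = term.strip().lower()
        # Map a known variant to its canonical term; otherwise keep the term as-is.
        canonical = variants.get(key, key)
        totals[canonical] += searches
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical export rows: (search term, number of searches). Invented numbers.
rows = [
    ("term a", 120),
    ("OTC", 70),
    ("over the counter", 25),
    ("term c", 60),
    ("otc ", 10),
]
# Assumed variant mapping, for illustration only.
variants = {"over the counter": "otc"}

ranked = rank_terms(rows, variants)
for term, searches in ranked:
    print(term, searches)
```

Normalizing case and whitespace first matters, because the same query typed slightly differently would otherwise be split across several rows.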

We looked at the VOC data again to see if the responses provided context for the searches. We found that they often included the type of information people were seeking. Then, we “recreated the scene” by doing a site search with the keyword in question (“OTC”).

We analyzed the search results that were served up: Do they make sense? Are they relevant?

We did a heuristic review of the first page of the search results and guess what? The results returned fell into one of two categories:

Lastly, we also searched the site with some of the keyword variants that were showing up in GA. We found that the search engine did not treat the known variants as synonyms, so people were getting wildly different results.

Conclusion: While some of the search results seemed relevant, there was extra noise to sift through. Users were forced to click on each search result to see whether it was actually relevant to what they were looking for.
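Our variant check was a manual comparison, but "wildly different results" can also be expressed as a number. The sketch below uses Jaccard overlap, a technique we are swapping in for illustration, to compare the first page of results for two queries. The result paths are hypothetical placeholders.

```python
def jaccard(a, b):
    """Overlap between two result lists: 1.0 means identical sets, 0.0 means nothing shared."""
    set_a, set_b = set(a), set(b)
    if not set_a and not set_b:
        return 1.0  # two empty result pages are trivially identical
    return len(set_a & set_b) / len(set_a | set_b)


# Hypothetical first-page results for a term and one of its variants.
results_term = ["/page-1", "/page-2", "/page-3", "/page-4"]
results_variant = ["/page-3", "/page-5", "/page-6", "/page-7"]

overlap = jaccard(results_term, results_variant)
print(f"overlap: {overlap:.2f}")
```

A low score for two queries that mean the same thing suggests the search engine is not treating them as synonyms.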

The answer is: whatever you can get your hands on! And… follow the clues!

Just like the detective in our murder mystery analogy, we were forced to continually re-evaluate the facts. In this case, we started with VOC data, then went to GA, back to the VOC data, and then did a series of heuristic reviews.

Sometimes you have to go back to a source and look again – maybe ask different questions, view the data a bit differently or look at different data altogether. It’s unlikely that one tool or technique, alone, will give you the data and insights you are looking for.

In the words of the famous TV detective Columbo, there’s always “just one more thing…”

The original version of this page was published at: https://www.theprimacy.com/blog/what-do-ux-research-and-murder-mysteries-have-common
